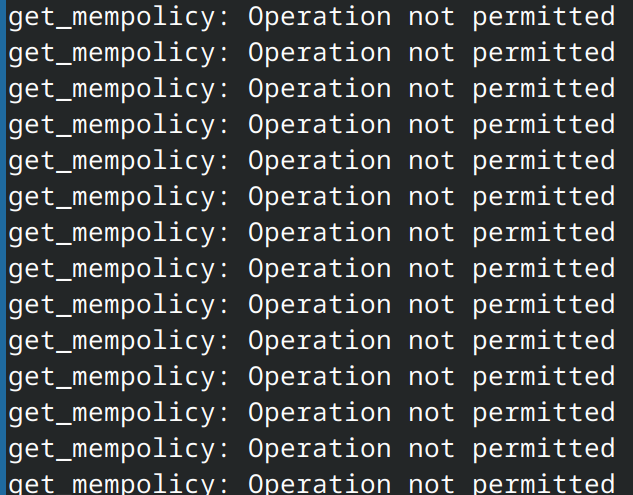

I’ve had great success with ClickHouse on a 4GB RAM server (shared with other things and under full load), but not on my small server with only 2GB RAM and 2GB swap. Lately I’ve had trouble running ClickHouse there alongside five or so other services, including Kafka, Zookeeper, two Python backends and a Node/Svelte frontend, all in one Docker Compose setup. The error I get is "get_mempolicy: Operation not permitted". This get_mempolicy issue has had some fixes recently, but I still see it spamming my ClickHouse logs whenever ClickHouse runs out of memory.

Checking one of the Python backends, where the error from ClickHouse is preserved, we can see it has clearly run out of some kind of memory. The system has 2GB of RAM and 2GB of swap and is using about 1GB of each. Nothing is really doing any work, but each service has its own overhead.

```text
clickhouse_connect.driver.exceptions.DatabaseError: HTTPDriver for http://clickhouse:8123 returned response code 500) Code: 241. DB::Exception: Memory limit (total) exceeded: would use 2.64 GiB (attempt to allocate chunk of 0 bytes), current RSS 706.06 MiB, maximum: 1.70 GiB. OvercommitTracker decision: Query was selected to stop by OvercommitTracker.: While executing AggregatingTransform. (MEMORY_LIMIT_EXCEEDED) (version 24.10.2.80 (official build))
```
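The "maximum: 1.70 GiB" in that error is ClickHouse's server-wide memory limit. Assuming the documented default max_server_memory_usage_to_ram_ratio of 0.9 is in effect (limit = detected RAM × ratio), a quick back-of-the-envelope check shows how much RAM ClickHouse appears to see. This is a sketch built only from the numbers in the message, not something read from the server:

```python
# Values copied from the MEMORY_LIMIT_EXCEEDED error above.
reported_limit_gib = 1.70
would_use_gib = 2.64

# Assumption: the default max_server_memory_usage_to_ram_ratio of 0.9 applies,
# so the server-wide limit = detected RAM * ratio.
default_ratio = 0.9

# Invert the formula to estimate the RAM ClickHouse detected.
implied_ram_gib = reported_limit_gib / default_ratio
# How far the aggregation wanted to go past the limit.
overshoot_gib = would_use_gib - reported_limit_gib

print(f"RAM ClickHouse appears to see: {implied_ram_gib:.2f} GiB")
print(f"query overshoot past the limit: {overshoot_gib:.2f} GiB")
```

About 1.89 GiB is plausible for a nominal 2GB server once the kernel takes its share, so the default ratio would explain the 1.70 GiB ceiling, and the aggregation wanted roughly 0.94 GiB more than that.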

Tune ClickHouse for Low Memory

The ClickHouse docs specifically mention two settings for tuning low-memory systems, and there is a third I’ve seen others use:

cache_size_to_ram_max_ratio: Sets the maximum ratio of cache size to RAM, which allows lowering the cache size on low-memory systems (so lower is better here).

max_server_memory_usage_to_ram_ratio: On hosts with low RAM and swap, you may need to set max_server_memory_usage_to_ram_ratio larger than 1 (so higher is better here). The server-wide limit is RAM multiplied by this ratio, so a value above 1 lets the limit exceed physical RAM. I’ve seen one project with this set to 100, but for now I’m setting it to 2.

mark_cache_size: This one feels a bit like a nuclear option since it hardcodes the size in bytes, but I’ve seen people use it. It sets the size of the cache for marks (the index of MergeTree-family tables).

```xml
<?xml version="1.0"?>
<clickhouse>
    <!-- https://clickhouse.com/docs/en/operations/server-configuration-parameters/settings#cache_size_to_ram_max_ratio -->
    <cache_size_to_ram_max_ratio replace="replace">0.2</cache_size_to_ram_max_ratio>

    <!-- https://clickhouse.com/docs/en/operations/server-configuration-parameters/settings#max_server_memory_usage_to_ram_ratio -->
    <!-- On hosts with low RAM and swap, you possibly need setting max_server_memory_usage_to_ram_ratio larger than 1. -->
    <max_server_memory_usage_to_ram_ratio replace="replace">2</max_server_memory_usage_to_ram_ratio>

    <!-- Reduce the mark cache size to 1GB (default is ~5GB) -->
    <!-- <mark_cache_size>1073741824</mark_cache_size> -->
</clickhouse>
```
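To sanity-check what this override does, here is a small Python sketch that parses the file (with the commented-out mark_cache_size enabled for illustration) and applies the settings to this box. It rests on assumptions rather than values read from a running server: about 1.89 GiB of RAM (1.70 GiB / 0.9, from the error above), 2 GiB of swap, the documented defaults of 0.5, 0.9 and 5 GiB, and my understanding that ClickHouse caps each cache at RAM × cache_size_to_ram_max_ratio at startup (the server log should say when it lowers a cache size). load_settings and effective_limits are hypothetical helper names for illustration only.

```python
import xml.etree.ElementTree as ET

GIB = 1024 ** 3

# The override file above, with mark_cache_size uncommented for illustration.
CONFIG = """<?xml version="1.0"?>
<clickhouse>
    <cache_size_to_ram_max_ratio replace="replace">0.2</cache_size_to_ram_max_ratio>
    <max_server_memory_usage_to_ram_ratio replace="replace">2</max_server_memory_usage_to_ram_ratio>
    <mark_cache_size>1073741824</mark_cache_size>
</clickhouse>"""

# Assumptions (not read from a real server): RAM implied by the error
# message (1.70 GiB / 0.9) and the 2 GiB of swap on this box.
RAM = 1.70 / 0.9 * GIB
SWAP = 2 * GIB

# Documented defaults, used when a setting is absent from the override.
DEFAULTS = {
    "cache_size_to_ram_max_ratio": 0.5,
    "max_server_memory_usage_to_ram_ratio": 0.9,
    "mark_cache_size": 5 * GIB,
}


def load_settings(xml_text: str) -> dict:
    """Hypothetical helper: overlay the top-level settings in xml_text on the defaults."""
    root = ET.fromstring(xml_text)
    settings = dict(DEFAULTS)
    for key in DEFAULTS:
        node = root.find(key)
        if node is not None and node.text:
            settings[key] = float(node.text)
    return settings


def effective_limits(settings: dict, ram: float) -> dict:
    """Hypothetical helper: estimate the server limit and cache caps in bytes."""
    server_limit = ram * settings["max_server_memory_usage_to_ram_ratio"]
    # Assumption: each cache is individually capped at RAM * cache_size_to_ram_max_ratio.
    cache_cap = ram * settings["cache_size_to_ram_max_ratio"]
    return {
        "server_limit": server_limit,
        "cache_cap": cache_cap,
        "mark_cache": min(settings["mark_cache_size"], cache_cap),
    }


for label, xml_text in [("defaults", "<clickhouse/>"), ("override", CONFIG)]:
    limits = effective_limits(load_settings(xml_text), RAM)
    print(f"{label}: " + ", ".join(f"{k}={v / GIB:.2f} GiB" for k, v in limits.items()))
    print(f"  fits in RAM + swap: {limits['server_limit'] <= RAM + SWAP}")
```

Under those assumptions, a ratio of 2 puts the ceiling at about 3.78 GiB, just under RAM plus swap (about 3.89 GiB), though the other containers share that space. A ratio of 100 would give roughly 189 GiB, which effectively removes the limit on this box. The 0.2 cache ratio caps the mark cache at about 0.38 GiB, already below the 1 GiB in the commented-out mark_cache_size line, which may be why that setting hasn't been needed.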

Working?

Somewhat. At least it isn’t dying immediately like it did before, and I haven’t needed mark_cache_size yet. I’ll keep an eye on it to see how it goes!